Attacks from every corner

Google’s new Gemini Nano Banana AI specializes in photo editing, but it reportedly carries significant risks. Nguyen Hong Phuc, Chief Scientist at Conductify AI, calls Google’s decision to leave the tool “uncensored” an ethical step backward.

He warns that relaxing safety barriers facilitates the creation of hyper-realistic forgeries, including sensitive content and celebrity likenesses. These fabrications are so convincing that even experts struggle to tell them apart from real images, amplifying the threat of fraud, political disinformation, and defamation.

Meanwhile, with dozens of other AI photo-editing tools taking the internet by storm, the scourge of deepfake fraud shows no sign of abating. Statistics from security organizations show that deepfake technology is driving targeted phishing campaigns against high-value individuals, particularly business leaders.

In 2024, there were reportedly 140,000 to 150,000 such cases globally, 75 percent of them targeting CEOs and other C-suite executives. Estimates suggest deepfakes could push global economic losses up 32 percent, to approximately US$40 billion a year by 2027.

Ngo Minh Hieu, Director of the Anti-Phishing Project at the National Cyber Security Association, warned that AI can speed up fraud twentyfold by automating interactions in real time.

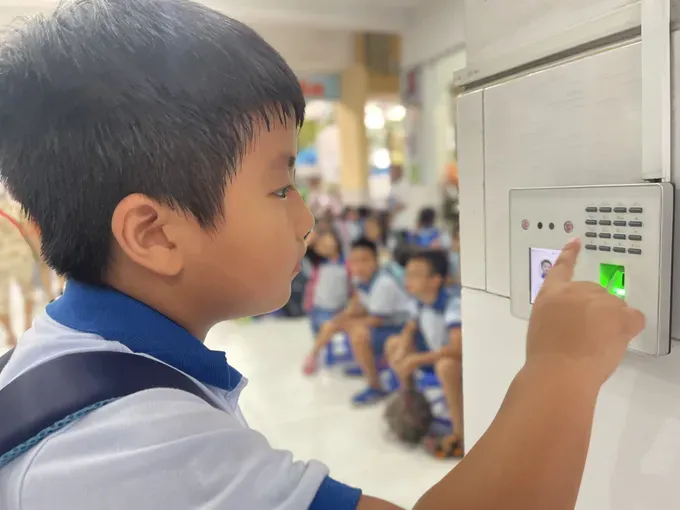

The most sophisticated threat is the "Man-in-the-Middle" attack. Here, hackers intercept video authentication streams, such as facial scans, and inject pre-prepared deepfakes in place of the user’s live data. This technique can bypass biometric checks, leaving even the hardened security systems of banks and financial institutions open to deception.

Better data management

As AI becomes ubiquitous, security and privacy risks escalate significantly. Data stored and processed on remote servers becomes an attractive target for hackers and cybercrime syndicates. According to the National Cyber Security Association, in the second quarter of 2025 alone, AI-powered cyberattacks surged by 62 percent, causing global damages estimated at US$18 billion.

Experts argue that data protection is a matter of survival. Yet data is still collected and traded openly on many black-market platforms for as little as US$20 a month. Cybercriminals can use tools that work like large language models but are customized for criminal purposes, capable of generating malicious code and even evading antivirus software.

In Vietnam, Decree No. 13/2023/ND-CP (issued on April 17, 2023) regulates personal data protection. In addition, the Law on Personal Data Protection is expected to take effect on January 1, 2026, providing a stronger legal basis for responding to data leaks and misuse.

However, according to the National Cyber Security Association, implementation still needs to be strengthened across three pillars: raising public awareness, increasing corporate accountability, and improving the capacity of regulatory agencies to handle violations. Beyond technical measures, every individual needs to learn to recognize warning signs and proactively protect themselves from risky digital interactions.

Security firm Kaspersky warns of the rise of “Dark AI” in the Asia-Pacific region: large language models (LLMs) that operate without safety controls and are used to facilitate fraud and cyberattacks. Sergey Lozhkin, a lead researcher at Kaspersky, highlights the emergence of “Black Hat GPT” models, AI tools specifically tweaked for illicit purposes such as creating malware, generating deepfakes, and drafting persuasive phishing emails.

To combat this, experts urge businesses to deploy next-generation security solutions capable of detecting AI-generated malware. Organizations must prioritize protecting their data and monitor AI-related vulnerabilities in real time. Mitigating “shadow AI” risks, which arise when employees use unapproved AI tools, also requires stronger access controls, better employee training, and security operations centers (SOCs) for rapid incident response.